One of the biggest problems with big data is figuring out how to use all of the information that you have. But before we can get to that, we have to get the data. Also, for a system to work well, it needs to be able to understand and show the data to people. Apache Kafka 아파치카프카 is an excellent tool for this.

What Exactly Is Apache Kafka?

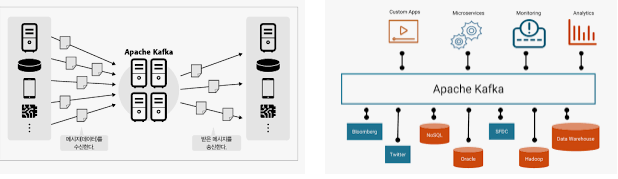

Apache Kafka 아파치카프카 is a data collection, processing, storage, and integration platform that collects, processes, stores, and integrates data at scale. Data integration, distributed logging, and stream processing are just a few of the many applications it may be put to use for. To fully comprehend Kafka’s actions, we must first understand an “event streaming platform.” Before we talk about Kafka’s architecture or its main parts, let’s talk about what an event is. This will assist in explaining how Kafka saves events, how events are entered and exited from the system, as well as how to evaluate event streams once they have been stored.

Kafka stores all received data to disc. Then, Kafka copies data in a Kafka cluster to keep it safe from being lost. A lot of things can make Kafka sprint. It doesn’t have a lot of bells and whistles, so that’s the first thing you should know about it. Another reason is the lack of unique message identifiers in Apache Kafka(아파치카프카). It takes into account the time when the message was sent. Also, it doesn’t keep track of who has read about a specific subject or who has seen a particular message. Consumers should monitor this data. When you get data, you can only choose an offset. The data will then be returned in sequence, beginning with that offset.

Apache Kafka Architecture

Kafka is commonly used with Storm, HBase, and Spark to handle real-time streaming data. It can send a lot of messages to the Hadoop cluster, no matter what industry or use case it is in. Taking a close look at its environment can help us better understand how it works.

APIs

It contains four main APIs:

· Producer API:

This API allows apps to broadcast a stream of data to one or more subjects.

· Consumer API:

Using the Consumer API, applications may subscribe to one or even more topics and handle the stream of data that is generated by the subscriptions

· Streams API:

One or more topics can use this API to get input and output. It converts the input streams to output streams so that they match.

· Connector API:

There are reusable producers as well as consumers that may be linked to existing applications thanks to this API.

Components and Description

· Broker

To keep the load balanced, Kafka clusters usually have a lot of brokers. Kafka brokers use zooKeeper to keep track of the state of their clusters. There are hundreds of thousands of messages that can be read and written to each Apache Kafka broker simultaneously. Each broker can manage TB of messages without slowing down. ZooKeeper can be used to elect the leader of a Kafka broker.

· ZooKeeper

ZooKeeper is used to keep track of and coordinate Kafka brokers. Most of the time, the ZooKeeper service tells producers and consumers when there is a new broker inside the Kafka system or when the broker in the Kafka system doesn’t work. In this case, the Zookeeper gets a report from the producer and the consumer about whether or not the broker is there or not. Then, the producer and the consumer decide and start working with another broker.

· Producers

People who make things send data to the brokers. A message is automatically sent to the new broker when all producers first launch it. The Apache Kafka producer does not wait for broker acknowledgements and transmits messages as quickly as the broker can manage.

· Consumers

Because Kafka brokers remain stateless, the consumer must keep track of the number of messages consumed through partition offset. If the consumer says that they’ve read all of the previous messages, they’ve done so. The consumer requests a buffer of bytes from the broker in an asynchronous pull request. Users may go back or forward in time inside a partition by providing an offset value. The value of the consumer offset is sent to ZooKeeper.

Conclusion

That concludes the Introduction. Keep in mind that Apache Kafka 아파치카프카 is indeed an enterprise-level message streaming, publishing, and consuming platform that may be used to link various autonomous systems.